On-prem deployment using VeridiumInstaller (RHEL8 - 11.1.10)

This procedure describes how to deploy VeridiumID on Red Hat Enterprise Linux 8 using the VeridiumInstaller.

1) Pre-requirements

1.1) OS level requirements

The firewall must be stopped on all nodes. Run the following command:

sudo systemctl stop firewalld

All nodes used in the deployment must have the same user account, which needs to:

Have SSH connectivity to all nodes using SSH keys

## generate key (can be generated on all servers)

ssh-keygen

## take the public key (id_rsa.pub) from the server where the installation will be run and add it to .ssh/authorized_keys on all servers (including that server itself)

cat .ssh/id_rsa.pub

vi .ssh/authorized_keys

## paste the key at the end of the file

Have sudo permissions (at least until the end of the deployment)
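The key distribution above can also be scripted. The following is a minimal sketch, assuming `ssh-copy-id` (part of the OpenSSH client tools) is available and that password login still works on the target nodes. The node addresses and the `build_copy_commands` and `build_check_commands` helpers are hypothetical and only print the commands so they can be reviewed first.

```shell
#!/bin/sh
# Hypothetical placeholders: replace with the addresses of all nodes,
# including the node the installation is started from.
NODES="${NODES:-10.0.0.11 10.0.0.12 10.0.0.13}"
PUBKEY="${PUBKEY:-$HOME/.ssh/id_rsa.pub}"

# Print one ssh-copy-id command per node so the plan can be reviewed
# before anything is changed on the targets.
build_copy_commands() {
    copy_user=$1
    shift
    for copy_node in "$@"; do
        printf 'ssh-copy-id -i %s %s@%s\n' "$PUBKEY" "$copy_user" "$copy_node"
    done
}

# Print one non-interactive login test per node; BatchMode makes ssh
# fail instead of prompting when key authentication does not work.
build_check_commands() {
    check_user=$1
    shift
    for check_node in "$@"; do
        printf 'ssh -o BatchMode=yes %s@%s true\n' "$check_user" "$check_node"
    done
}

# NODES is intentionally unquoted so that it splits into one argument per node.
build_copy_commands "$(id -un)" $NODES
build_check_commands "$(id -un)" $NODES
```

Run the printed commands one at a time from the installation node; each `ssh -o BatchMode=yes` check should return without asking for a password.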

1.2) Pre-required RPMs

The following packages must be installed from the official repositories on all nodes before VeridiumID can be used. Run the following command as root or as a user with sudo:

sudo yum -y install vim apr-devel openssl-devel libstdc++-devel curl unzip wget zlib zlib-devel nc openssh-clients perl rsync chrony python39 python39-pip net-tools dialog jq rng-tools tmux tcpdump

Install Java 11 and verify the Python 3 version, using the following commands as root or as a user with sudo:

sudo yum -y install java-11-openjdk

##set default java to be java-11 on all machines

JAVA_VERS=$(ls /usr/lib/jvm | grep "^java-11-openjdk-11" | tr -d '[:space:]') && sudo alternatives --set java "/usr/lib/jvm/${JAVA_VERS}/bin/java"

## check that the Java version is 11

java -version

##output example: openjdk version "11.0.22" 2024-01-16 LTS

python3 --version

## if the version is not Python 3.9.X, run the following command and select Python version 3.9.X

sudo alternatives --config python3

## if the version is still not correct, run the following:

sudo ln -sf /usr/bin/python3.9 /usr/local/bin/python3
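The two version checks above can be combined into one script that is run on every node. This is a minimal sketch; the helper names `java_major`, `python_minor` and `check_versions` are hypothetical, and the parsing assumes the output formats shown above (`openjdk version "11.0.22" ...` and `Python 3.9.X`).

```shell
#!/bin/sh
# Extract the major version from the first line of `java -version` output.
java_major() {
    printf '%s\n' "$1" | sed -n 's/.*version "\([0-9][0-9]*\)\..*/\1/p'
}

# Extract major.minor from `python3 --version` output.
python_minor() {
    printf '%s\n' "$1" | sed -n 's/^Python \([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p'
}

# Compare the installed versions with the ones this procedure expects.
check_versions() {
    java_line=$(java -version 2>&1 | head -n 1)
    python_line=$(python3 --version 2>&1)
    if [ "$(java_major "$java_line")" != "11" ]; then
        echo "Java 11 expected, found: $java_line"
        return 1
    fi
    if [ "$(python_minor "$python_line")" != "3.9" ]; then
        echo "Python 3.9 expected, found: $python_line"
        return 1
    fi
    echo "Java and Python versions OK"
}
```

Calling `check_versions` on a node prints either a confirmation or the first mismatch it finds.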

To activate the official repositories on Red Hat, each node must be registered with a subscription. Use the following command as root:

sudo subscription-manager register --username <username> --password <password> --auto-attach

2) Deployment steps

2.1) Getting the VeridiumInstaller archive on the node

Using SCP, copy the VeridiumInstaller archive to the home directory of the user mentioned above, on the node from which the installation will be started, then extract the archive:

tar xvf veridium-installer-rhel8-x.x.x.tar.gz

2.2) Run Pre-requirements checks, to check if components are installed

Run the script with the user that created the keys. This needs to be executed on one machine by providing the internal IP addresses of all nodes that will be part of the deployment.

./check_prereqs_rhel8.sh -r -w IP1,IP2 -p IP3,IP4,IP5

2.3) Running the pre-reqs script to install required packages

From the current directory, run the pre-reqs-rhel8.sh script (without providing any parameters).

./pre-reqs-rhel8.sh

The pre-reqs-rhel8.sh script will do the following:

check the SSH connectivity from the current node to all nodes provided in the list

install all packages required

install Python 3.9 and all its required modules

install the VeridiumID layout including the veridiumid user (which will be used further in the installation)

configure SSH connectivity and sudo permissions for the veridiumid user (both can be removed after the installation has been completed)

move all installation packages to veridiumid user’s home directory (/home/veridiumid)

check if all nodes have dates synced using an NTP server

Please configure chrony with your local NTP server, so there will be no issues with the time.

sudo vi /etc/chrony.conf

sudo systemctl status chronyd

sudo systemctl enable chronyd; sudo systemctl start chronyd

date

sudo chronyc -a sources

## also check that the local time zone is set correctly

timedatectl

## set the time zone if needed (list the available zones, then set the correct one)

timedatectl list-timezones | grep Bucharest

## sudo timedatectl set-timezone Europe/Berlin
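Once chrony is configured, the clocks of all nodes can be compared from the installation node. This is a rough sketch under stated assumptions: key-based SSH to every node already works, the node addresses are placeholders, and the helpers `collect_epochs` and `max_drift` are hypothetical. Because the nodes are queried one after another, SSH latency is included in the result, so this only detects drift of several seconds or more.

```shell
#!/bin/sh
# Print the current epoch time of every node given as an argument.
collect_epochs() {
    for epoch_node in "$@"; do
        ssh -o BatchMode=yes "$epoch_node" date +%s
    done
}

# Print the largest difference, in seconds, between the given epoch values.
max_drift() {
    [ "$#" -gt 0 ] || return 1
    drift_min=$1
    drift_max=$1
    for drift_t in "$@"; do
        if [ "$drift_t" -lt "$drift_min" ]; then drift_min=$drift_t; fi
        if [ "$drift_t" -gt "$drift_max" ]; then drift_max=$drift_t; fi
    done
    echo $((drift_max - drift_min))
}
```

For example, `max_drift $(collect_epochs 10.0.0.11 10.0.0.12 10.0.0.21)` prints the spread in seconds; a large value suggests that chronyd is not synchronized on at least one node.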

## Also, if the installation is done in /u01, the following needs to be executed; this applies to specific clients with a different default user home.

##sudo semanage fcontext -a -e /home /u01/users

##sudo restorecon -vR /u01/users

2.4) Run the VeridiumInstaller

All further steps will be performed using the veridiumid user and from the user’s home directory (/home/veridiumid).

2.4.1) Running the VeridiumInstaller script

Run the following script as veridiumid user to start the configuration of VeridiumID:

./veridium-installer-rhel8.sh

This script starts the installation and configuration of the VeridiumID deployment and guides the user through the installation wizard. Please read the following information carefully:

###########################

1. If you want to use one domain, set both INTERNAL and EXTERNAL domain with the same value.

2. If you choose ports, then the following entries are necessary in your DNS:

## INTERNAL SANs: veridium.client.local

## EXTERNAL SANs: veridium.client.com

3. If you choose FQDN, then the following entries are necessary in your DNS:

## INTERNAL SANs: admin-veridium.client.local, ssp-veridium.client.local, shib-veridium.client.local, veridium.client.local

## EXTERNAL SANs: shib-veridium.client.com, ssp-veridium.client.com, dmz-veridium.client.com, veridium.client.com
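Before starting the wizard, it can help to confirm that the DNS entries listed above actually resolve from the installation node. The following is a minimal sketch, assuming `getent` (part of glibc on RHEL) is available; the helpers `resolve` and `unresolved_names` are hypothetical.

```shell
#!/bin/sh
# Return success when the name resolves through the system resolver.
resolve() {
    getent hosts "$1" >/dev/null 2>&1
}

# Print every name from the arguments that does not resolve.
unresolved_names() {
    for dns_name in "$@"; do
        resolve "$dns_name" || echo "$dns_name"
    done
}
```

For the example internal domain used above, `unresolved_names admin-veridium.client.local ssp-veridium.client.local shib-veridium.client.local veridium.client.local` prints nothing when every entry is present in DNS.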

2.4.2) Provide deployment details using the Installation Wizard

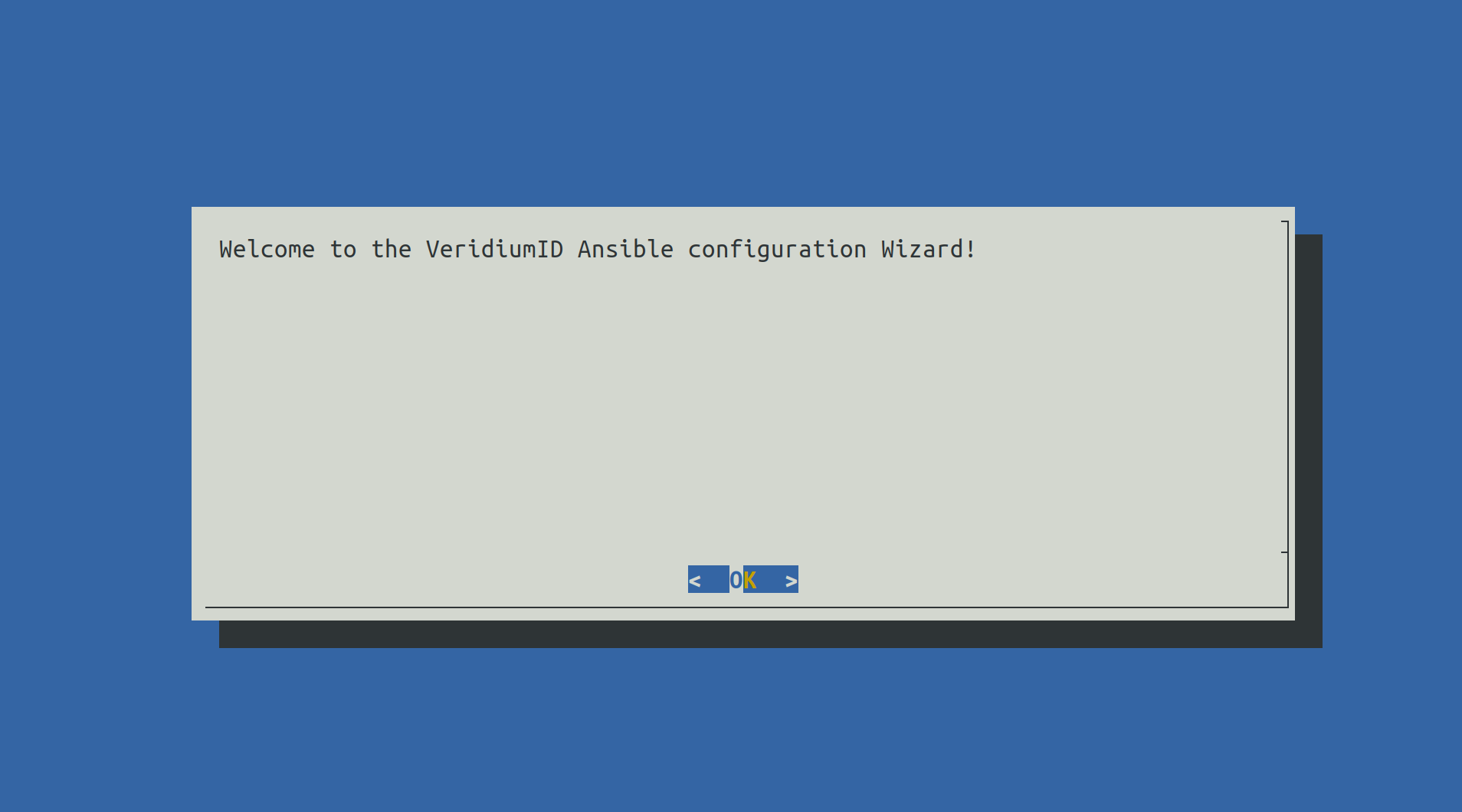

When the installation wizard starts, the following screen is shown in the terminal:

Press Enter to proceed.

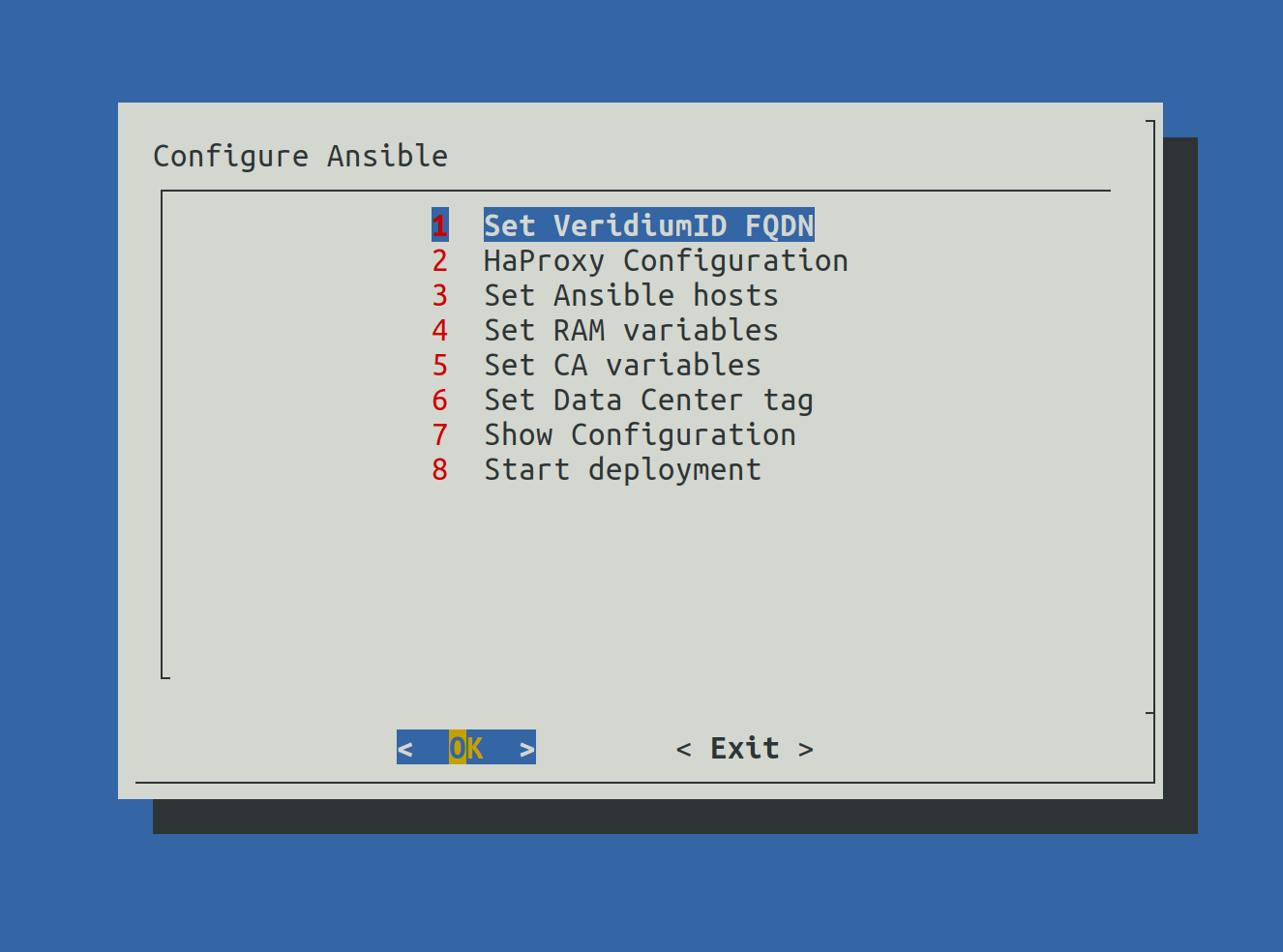

Each option in the menu is detailed in the following steps.

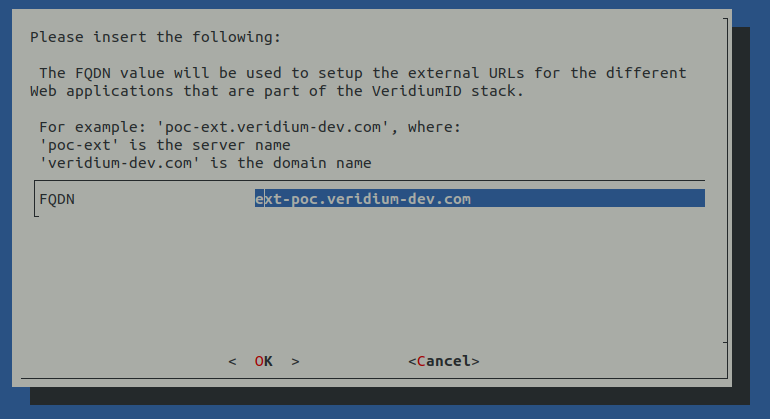

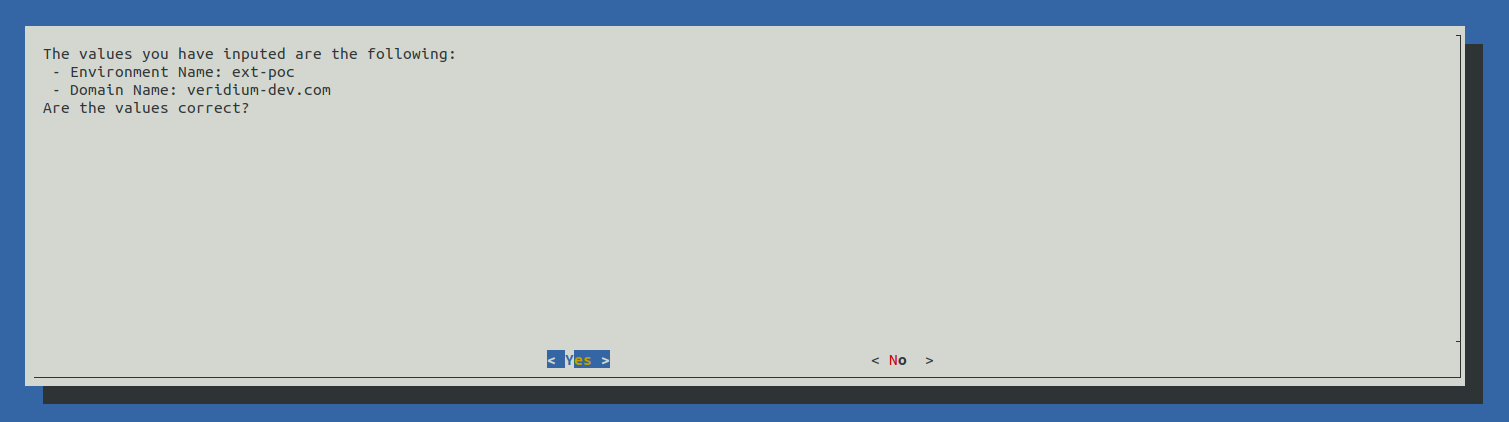

2.4.2.1) Set VeridiumID FQDN - First time INTERNAL and second time EXTERNAL.

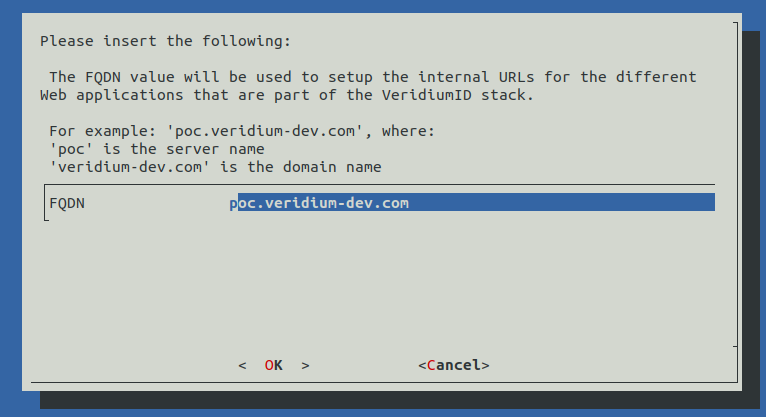

When selecting Set VeridiumID FQDN the following screen will be shown:

Write the correct INTERNAL FQDN in place of poc.veridium-dev.com and press the TAB key to go to OK and press Enter to continue.

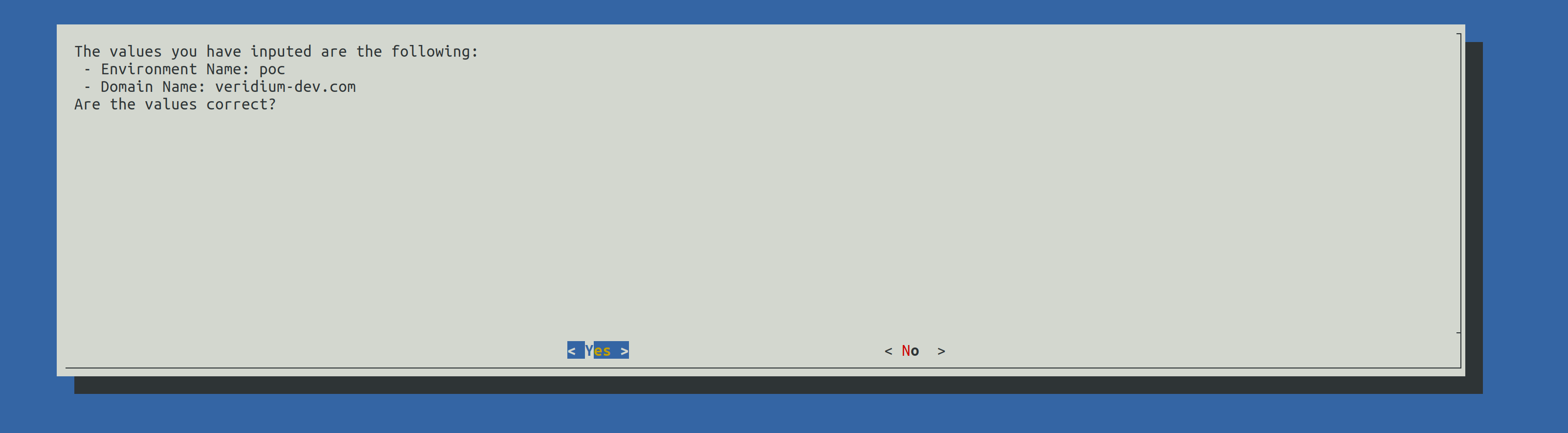

A validation of the FQDN will be prompted. Press Enter to continue.

Write the correct EXTERNAL FQDN in place of ext-poc.veridium-dev.com and press the TAB key to go to OK and press Enter to continue.

A validation of the FQDN will be prompted. Press Enter to continue.

2.4.2.2) HaProxy configuration

There are two configurations for HaProxy:

SNI: all component URLs will be composed from the base FQDN (the one provided during step 2.4.2.1) alongside the component's name, for example:

for the Admin Dashboard : admin-intFQDN

for the Self Service Portal: ssp-intFQDN

for the Websec API: intFQDN

for Shibboleth Internal (Identity Provider): shib-intFQDN

for Shibboleth External (Identity Provider): shib-extFQDN

for the Self Service Portal: ssp-extFQDN

for the DMZ Websec API: dmz-extFQDN

for the Websec API: extFQDN

Ports: all component URLs will be composed from the base FQDN and a different port for each component, for example:

for the Admin Dashboard: intFQDN:9444

for the Self Service Portal: intFQDN:9987

for the Websec API: intFQDN:443

for internal Shibboleth (Identity Provider): intFQDN:8945

for the Self Service Portal: extFQDN:9987

for the Websec API: extFQDN:443

for external Shibboleth (Identity Provider): extFQDN:8944

for the DMZ Websec API: extFQDN:8544

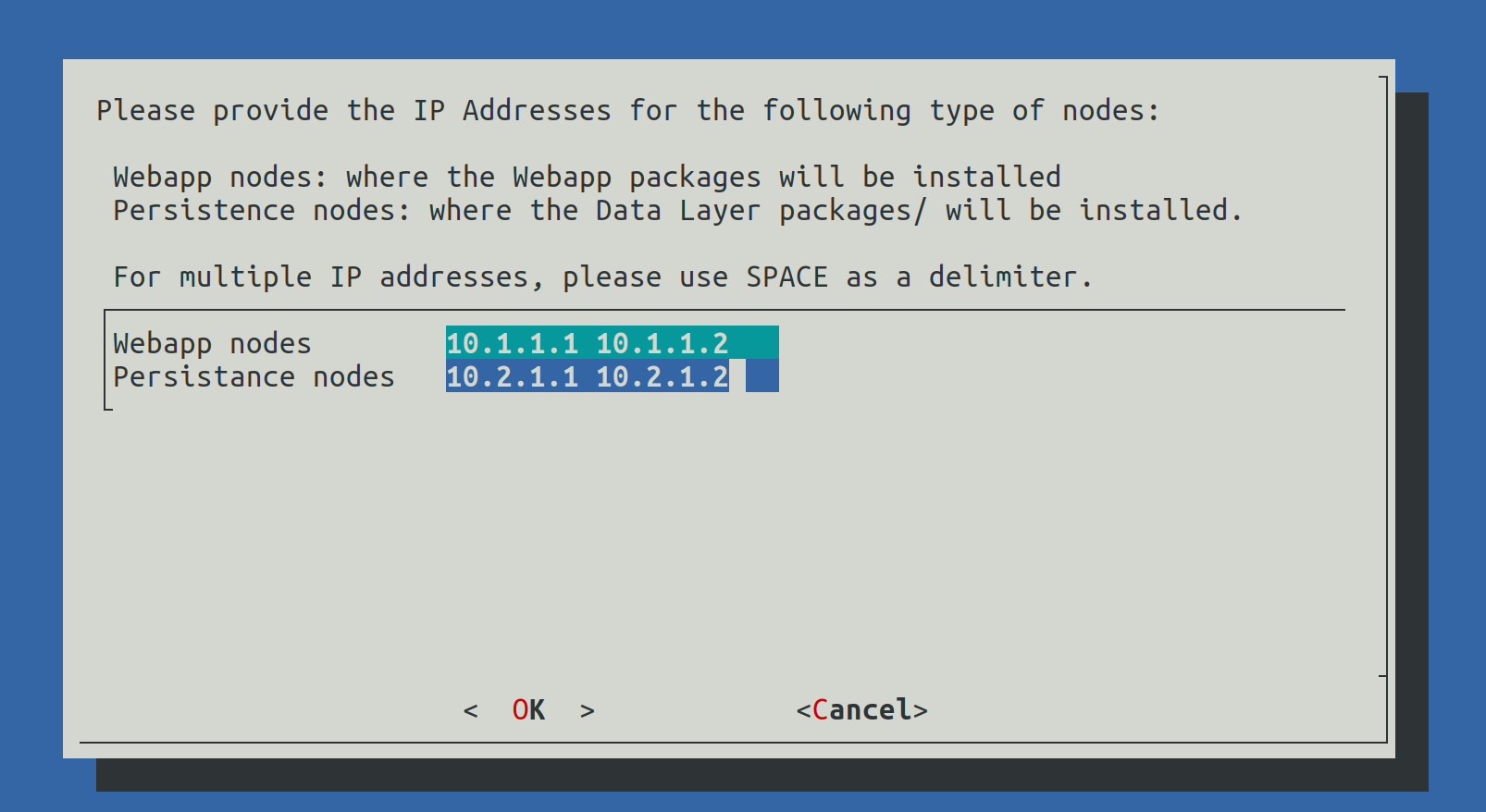
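The two naming schemes can be summarized as a small helper that prints the address of each component for a given internal and external FQDN. This is only an illustrative sketch: `component_urls` is a hypothetical function, the prefixes and ports are copied from the lists above, and no URL scheme is added because the text does not state one.

```shell
#!/bin/sh
# Print "component: address" lines for the chosen HaProxy mode (sni or ports).
component_urls() {
    url_mode=$1
    int_fqdn=$2
    ext_fqdn=$3
    case "$url_mode" in
        sni)
            printf '%s: %s\n' \
                "Admin Dashboard" "admin-$int_fqdn" \
                "Self Service Portal (internal)" "ssp-$int_fqdn" \
                "Websec API (internal)" "$int_fqdn" \
                "Shibboleth Internal" "shib-$int_fqdn" \
                "Shibboleth External" "shib-$ext_fqdn" \
                "Self Service Portal (external)" "ssp-$ext_fqdn" \
                "DMZ Websec API" "dmz-$ext_fqdn" \
                "Websec API (external)" "$ext_fqdn"
            ;;
        ports)
            printf '%s: %s\n' \
                "Admin Dashboard" "$int_fqdn:9444" \
                "Self Service Portal (internal)" "$int_fqdn:9987" \
                "Websec API (internal)" "$int_fqdn:443" \
                "Shibboleth Internal" "$int_fqdn:8945" \
                "Self Service Portal (external)" "$ext_fqdn:9987" \
                "Websec API (external)" "$ext_fqdn:443" \
                "Shibboleth External" "$ext_fqdn:8944" \
                "DMZ Websec API" "$ext_fqdn:8544"
            ;;
        *)
            echo "unknown mode: $url_mode (expected sni or ports)" >&2
            return 1
            ;;
    esac
}
```

For example, `component_urls sni veridium.client.local veridium.client.com` lists the eight host names that need DNS entries and certificate SANs in SNI mode, while `component_urls ports ...` shows that only the two base names are needed.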

2.4.2.3) Set Ansible Hosts

In this step, select from the list of IP addresses which nodes will be Webapplication nodes and which will be Persistence nodes (data nodes).

The list of nodes must be delimited by spaces.

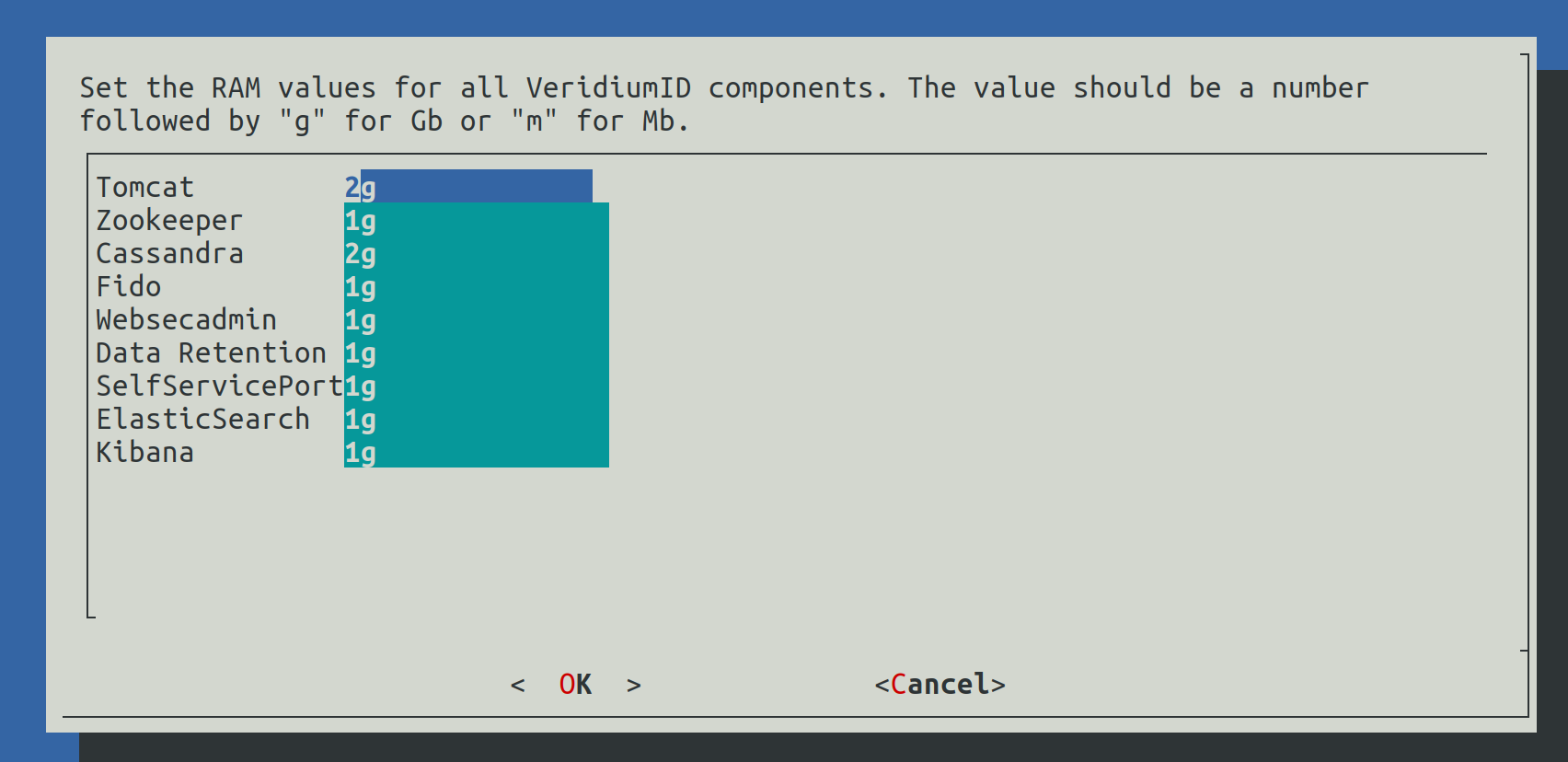

2.4.2.4) Set RAM values

In this step the RAM values for the different components will be configured. Each value must consist of a number followed by g for gigabytes or m for megabytes.

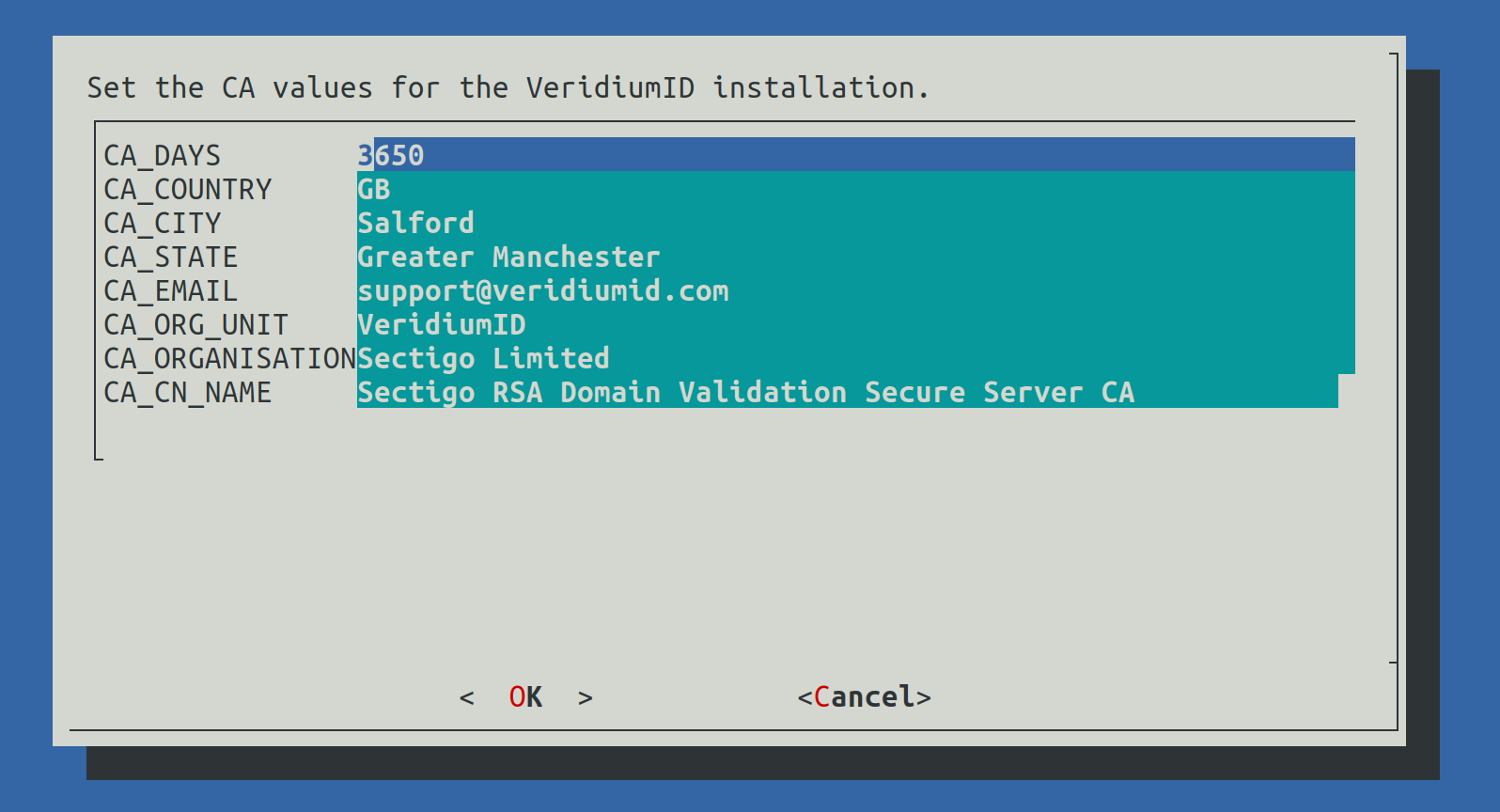
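The expected RAM value format can be checked before it is typed into the wizard. This is a small sketch with a hypothetical helper, `is_valid_ram`; it assumes that only lowercase `g` and `m` suffixes are accepted, as described above.

```shell
#!/bin/sh
# Succeed only for a positive integer immediately followed by g or m.
is_valid_ram() {
    printf '%s\n' "$1" | grep -Eq '^[0-9]+[gm]$'
}
```

Values such as `4g` and `512m` pass this check, while `4`, `g` and `4 g` are rejected.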

2.4.2.5) Set CA variables

This step will configure the values used for the internal Certification Authority of the VeridiumID deployment (used to generate internal certificates).

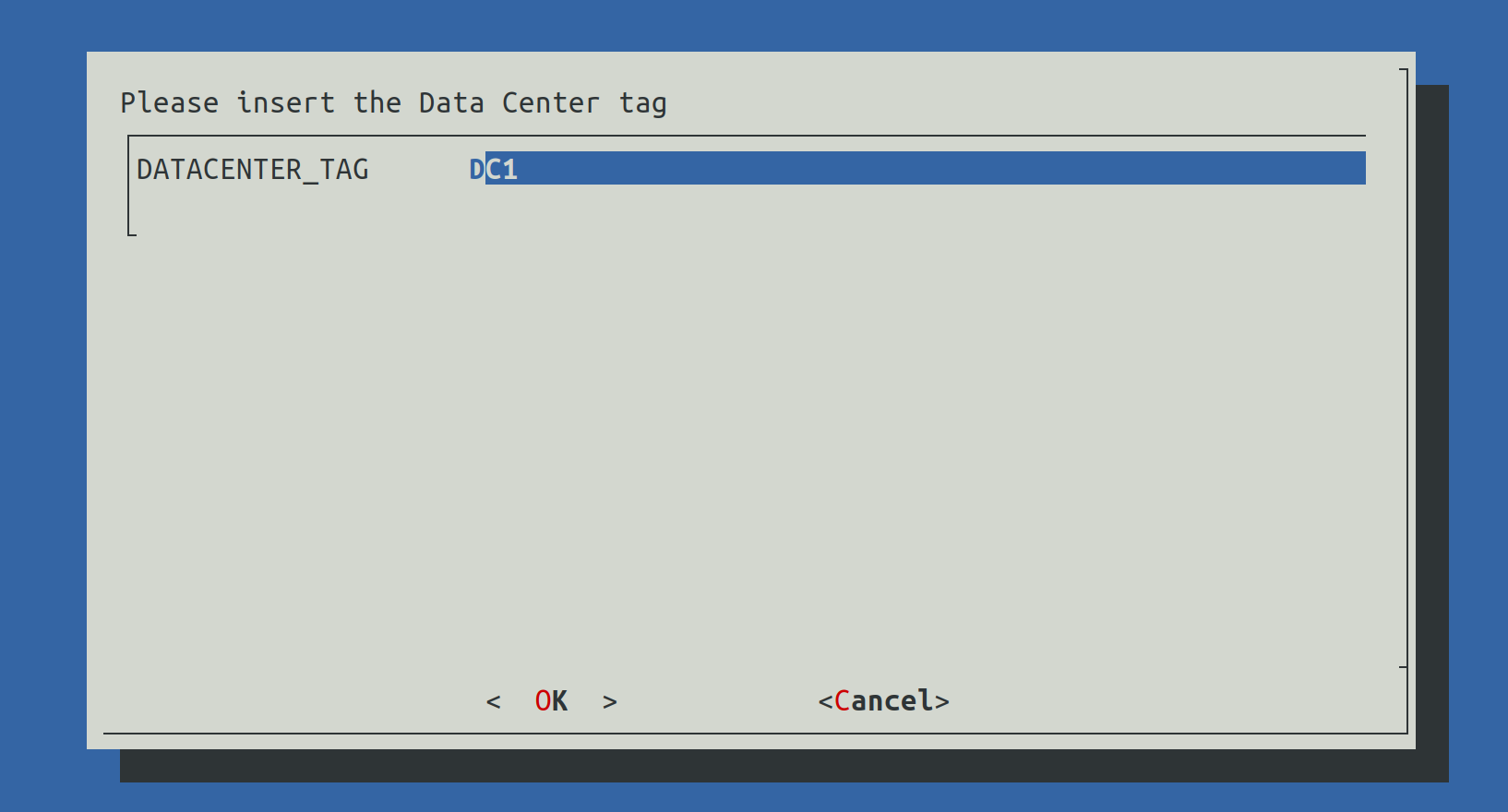

2.4.2.6) Set Data Center Tag

This value will be used to define the name of the current data center.

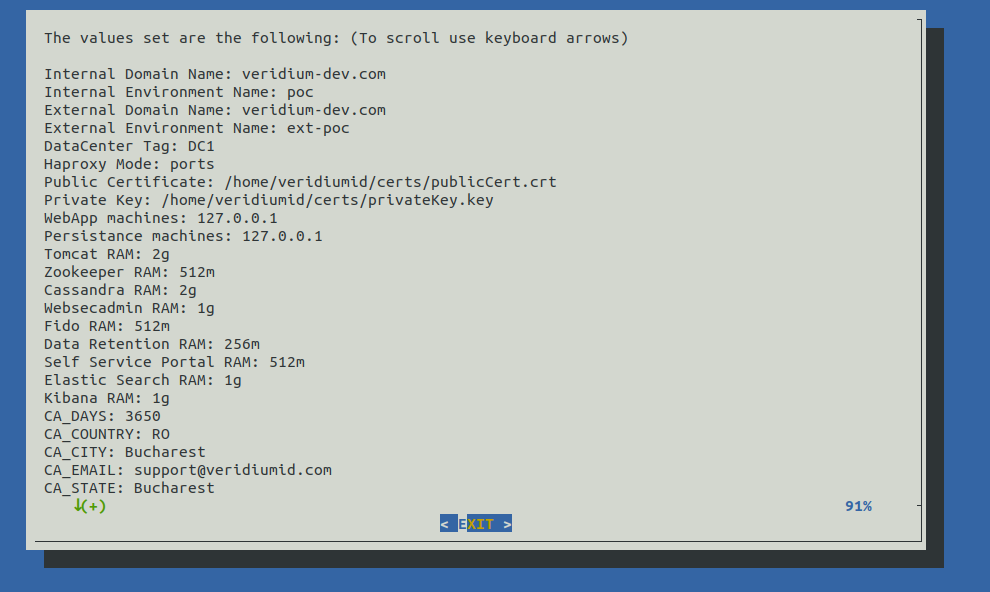

2.4.2.7) Show configuration

In this step, all values provided earlier can be reviewed and validated.

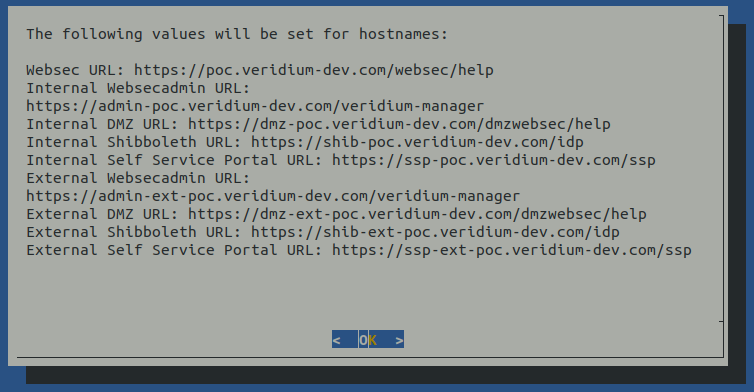

2.4.2.8) Start deployment

This step will close the Installation Wizard and provide the list of FQDNs:

The values can be viewed later in the following file: /home/veridiumid/host_list.txt

After exiting the Installation Wizard, the deployment process will start by:

Checking the SSH connectivity from this node to all other nodes

Installing specific VeridiumID components for Webapplication and Persistence nodes

Starting the Ansible configuration

User veridiumid may run the following commands on the machine.

veridiumid ALL=(root) NOPASSWD: /bin/systemctl stop ver*

veridiumid ALL=(root) NOPASSWD: /bin/systemctl start ver*

veridiumid ALL=(root) NOPASSWD: /bin/systemctl enable ver*

veridiumid ALL=(root) NOPASSWD: /bin/systemctl disable ver*

veridiumid ALL=(root) NOPASSWD: /bin/systemctl restart ver*

veridiumid ALL=(root) NOPASSWD: /bin/systemctl status ver*

veridiumid ALL=(root) NOPASSWD: /sbin/service ver* stop

veridiumid ALL=(root) NOPASSWD: /sbin/service ver* start

veridiumid ALL=(root) NOPASSWD: /sbin/service ver* restart

veridiumid ALL=(root) NOPASSWD: /sbin/service ver* status

veridiumid ALL=(root) NOPASSWD: /usr/bin/crontab -l

veridiumid ALL=(root) NOPASSWD: /usr/bin/crontab -e

veridiumid ALL=(root) NOPASSWD: /usr/bin/less /var/log/messages

veridiumid ALL=(root) NOPASSWD: /sbin/tcpdump

veridiumid ALL=(root) NOPASSWD: /bin/bash /etc/veridiumid/scripts/*

veridiumid ALL=(root) NOPASSWD: /bin/python3 /etc/veridiumid/scripts/*

2.5) Cleanup installation files

The following script, located in the veridiumid user’s home directory, cleans up the installation files (needed when the same machine is used to deploy another environment or to redeploy the current one).

Run the following command as veridiumid user:

# To remove local installation files (in order to deploy on a new environment):

./cleanup_install_files_rhel8.sh

# To redeploy the same environment (requires removing the deployment's CA directory):

./cleanup_install_files_rhel8.sh -c

To redeploy the same environment, after running the cleanup command (with the CA directory included), the following must also be performed:

Connect to all webapp nodes and stop all services by running the following command as root:

bash /etc/veridiumid/scripts/veridium_services.sh stop

Connect to a persistence node and do the following:

Remove Zookeeper data using the following command as root:

/opt/veridiumid/zookeeper/bin/zkCli.sh

# After accessing the Zookeeper command line, run:

deleteall /veridiumid

# To exit:

quit

Remove Cassandra keyspace using the following command as root:

/opt/veridiumid/cassandra/bin/cqlsh --cqlshrc=/opt/veridiumid/cassandra/conf/veridiumid_cqlshrc --ssl -e 'drop keyspace veridium;'

# Even if a timeout is reported, the keyspace should have been deleted. Check with the following command; the veridium keyspace should no longer exist:

/opt/veridiumid/cassandra/bin/cqlsh --cqlshrc=/opt/veridiumid/cassandra/conf/veridiumid_cqlshrc --ssl -e 'desc keyspaces;'

Connect to all persistence nodes and stop all services by running the following command as root:

bash /etc/veridiumid/scripts/veridium_services.sh stop
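The order of the redeploy cleanup can be laid out as a plan before anything is executed. The sketch below only prints commands. It assumes SSH access from the installation node, uses placeholder node addresses, and runs the stop script through `sudo /bin/bash`, which is an assumption about how root privileges are obtained; `plan_stop_services` is a hypothetical helper.

```shell
#!/bin/sh
# Stop command from the procedure above, run through sudo (assumption).
STOP_CMD='sudo /bin/bash /etc/veridiumid/scripts/veridium_services.sh stop'

# Print the ssh command that stops all services on each given node.
plan_stop_services() {
    for stop_node in "$@"; do
        printf 'ssh %s "%s"\n' "$stop_node" "$STOP_CMD"
    done
}

# Hypothetical node lists: replace with the real addresses.
WEBAPP_NODES="${WEBAPP_NODES:-10.0.0.11 10.0.0.12}"
PERSISTENCE_NODES="${PERSISTENCE_NODES:-10.0.0.21 10.0.0.22 10.0.0.23}"

# The lists are intentionally unquoted so they split into one argument per node.
echo "# 1. Stop services on all webapp nodes"
plan_stop_services $WEBAPP_NODES
echo "# 2. On one persistence node: remove the Zookeeper data and the Cassandra keyspace"
echo "# 3. Stop services on all persistence nodes"
plan_stop_services $PERSISTENCE_NODES
```

The Zookeeper and Cassandra removal in step 2 is interactive and is left as a manual step on a single persistence node.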