Disaster recovery documentation

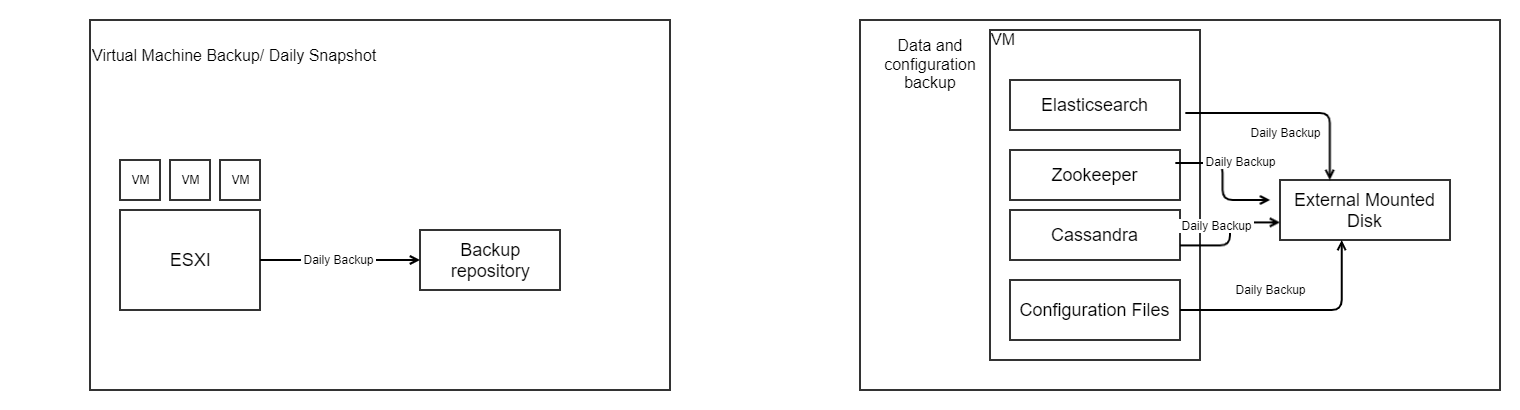

1) VM Snapshots

The client should periodically create VM snapshots of all servers using their virtualization solution and store them on separate storage. Configure the schedule according to the client's backup policy.

We recommend taking a snapshot every day and keeping the last 2 snapshots.

Servers can be restored from these snapshots.

2) Data and configuration backup

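Backups are performed for the following VeridiumID components:

Cassandra - weekly backup; the last 3 backups are kept by default.

ElasticSearch - daily backup; data backups are incremental and the last 10 settings backups are kept.

Zookeeper - weekly backup; the last 5 backups are kept by default.

Configuration data - weekly archive of the main configuration files (certificates, service configuration, etc.), used to restore a node's configuration; backups from the last 31 days are kept.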

We recommend storing backup data on a separately mounted disk that does not belong to the same machine. By default, backups are stored under /opt/veridiumid/backup/; the path can be changed in the backup configuration files.
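A quick sanity check for this recommendation is to compare the device number of the backup path with that of the root filesystem. The following is a minimal sketch, assuming GNU coreutils `stat`; the function names are hypothetical and not part of VeridiumID. A different device number only shows that the path is on a different filesystem, not that the storage lives outside the machine, so treat an OK result as necessary rather than sufficient.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not shipped with VeridiumID).
# Assumes GNU coreutils: `stat -c %d` prints the device number of a path.

# Return 0 if both paths are on the same filesystem, 1 if not, 2 on error.
same_filesystem() {
  local dev_a dev_b
  dev_a=$(stat -c %d "$1" 2>/dev/null) || return 2
  dev_b=$(stat -c %d "$2" 2>/dev/null) || return 2
  [ "$dev_a" = "$dev_b" ]
}

# Warn when the backup directory shares a filesystem with "/".
check_backup_location() {
  local backup_dir=${1:-/opt/veridiumid/backup}
  same_filesystem "$backup_dir" /
  case $? in
    0) echo "WARNING: $backup_dir is on the root filesystem"; return 1 ;;
    1) echo "OK: $backup_dir is on a separate filesystem" ;;
    *) echo "ERROR: cannot stat $backup_dir"; return 2 ;;
  esac
}

# Example: check_backup_location /opt/veridiumid/backup
```

Run as `check_backup_location /opt/veridiumid/backup`, the function prints a warning and returns 1 while the default backup path is still on the root filesystem.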

Each component can be restored individually from its own backup.

Data and configuration backup - details

| Node type | Backups performed |
|---|---|
| Persistence nodes | Cassandra backup, ElasticSearch backup, Configuration backup |
| Webapp nodes | Zookeeper backup, Configuration backup |

1) Zookeeper backup

This process downloads the contents of Zookeeper and saves them to disk.

| Item | Details |
|---|---|
| Script location | /etc/veridiumid/scripts/zookeeper_backup.sh |
| Configuration file | Does not use a configuration file |
| Cronjob default time | 0 2 * * 6 (Saturdays at 02:00) |
| Log file | /var/log/veridiumid/ops/zookeeper_backup.log |
| Number of backups maintained | 5 |
| Backup location | /opt/veridiumid/backup/zookeeper |
| Backup name | zookeeper_backup_YYYY-MM-DD-HH-mm-ss |
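The Zookeeper backup names embed a zero-padded timestamp, so sorting them as text also sorts them by age. The sketch below relies on that to find the newest backup (a natural PATH_TO_BACKUP for a restore) and to preview which backups a given limit would leave out. It is a minimal illustration with hypothetical function names; it is not the logic of zookeeper_backup.sh, and it deletes nothing.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of zookeeper_backup.sh).
# Names embed a zero-padded timestamp (zookeeper_backup_YYYY-MM-DD-HH-mm-ss),
# so a plain lexical sort orders them from oldest to newest.

# Print the Zookeeper backup directories in DIR, oldest first.
list_zk_backups() {
  local dir=${1:-/opt/veridiumid/backup/zookeeper}
  find "$dir" -mindepth 1 -maxdepth 1 -type d -name 'zookeeper_backup_*' | LC_ALL=C sort
}

# Print the newest backup directory (a candidate PATH_TO_BACKUP).
latest_zk_backup() {
  list_zk_backups "$1" | tail -n 1
}

# Print the backups outside the newest KEEP entries, i.e. the ones a
# limit of KEEP would not retain. Nothing is deleted.
zk_backups_over_limit() {
  local dir=$1 keep=$2 total
  total=$(list_zk_backups "$dir" | wc -l | tr -d ' ')
  if [ "$total" -gt "$keep" ]; then
    list_zk_backups "$dir" | head -n "$((total - keep))"
  fi
}

# Example:
# bash /opt/veridiumid/migration/bin/migration.sh -u "$(latest_zk_backup)"
```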

Changing the number of maintained backups:

To change the limit, run the following command:

sed -i "s|LIMIT=.*|LIMIT=<NEW_LIMIT>|g" /etc/veridiumid/scripts/zookeeper_backup.sh

Where:

<NEW_LIMIT> is the new number of backups to keep on disk.

Performing a backup:

Run the following command to perform a Zookeeper backup:

bash /etc/veridiumid/scripts/zookeeper_backup.sh

Recover from backup:

To restore the Zookeeper JSON files from a backup, run the following command:

bash /opt/veridiumid/migration/bin/migration.sh -u PATH_TO_BACKUP

Where:

PATH_TO_BACKUP is the full path to the Zookeeper backup directory, for example: /opt/veridiumid/backup/zookeeper/zookeeper_backup_2023-06-17-02-00-01

2) Cassandra backup

This process copies the existing Cassandra keyspaces and their configuration and saves them to disk.

| Item | Details |
|---|---|
| Script location | /opt/veridiumid/backup/cassandra/cassandra_backup.sh |
| Configuration file | /opt/veridiumid/backup/cassandra/cassandra_backup.conf |
| Cronjob default time | 0 4 * * 6 (Saturdays at 04:00) |
| Log file | /var/log/veridiumid/cassandra/backup.log |
| Number of backups maintained | 3 |
| Backup location | /opt/veridiumid/backup/cassandra |
| Backup name | YYYY-MM-DD_HH-mm |

Configuration file:

The configuration file contains the following:

CASSANDRA_HOME="/opt/veridiumid/cassandra"

CASSANDRA_KEYSPACE="veridium"

SNAPSHOT_RETENTION="3"

BACKUP_LOCATION="/opt/veridiumid/backup/cassandra"

LOG_DIRECTORY="/var/log/veridiumid/cassandra"

USER="veridiumid"

GROUP="veridiumid"

Changing the number of maintained backups:

To change the number of maintained backups, change the value of SNAPSHOT_RETENTION in the configuration file.
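The edit can also be scripted. The following is a minimal sketch of a hypothetical helper (not shipped with VeridiumID), assuming GNU sed and that the key is assigned at the start of a line, as in the configuration file above. It refuses to touch a file that does not already define the key and keeps the previous version as FILE.bak.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set KEY="VALUE" in a shell-style configuration file
# such as cassandra_backup.conf. Assumes GNU sed (-i) and that KEY is
# assigned at the start of a line. The original is kept as FILE.bak.
set_conf_value() {
  local file=$1 key=$2 value=$3
  if ! grep -q "^${key}=" "$file"; then
    echo "ERROR: ${key} not found in ${file}" >&2
    return 1
  fi
  sed -i.bak "s|^${key}=.*|${key}=\"${value}\"|" "$file"
}

# Example: keep 5 Cassandra backups instead of 3
# set_conf_value /opt/veridiumid/backup/cassandra/cassandra_backup.conf SNAPSHOT_RETENTION 5
```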

Performing a backup:

Run the following command as root user to perform a Cassandra backup:

bash /opt/veridiumid/backup/cassandra/cassandra_backup.sh -c=/opt/veridiumid/backup/cassandra/cassandra_backup.conf

Recover from backup:

Stop the web applications on all webapp nodes:


# Connect to all webapp nodes and run the following command as root:
bash /etc/veridiumid/scripts/veridium_services.sh stop

Recreate the Cassandra keyspace


# Run this command on a single persistence node as root:
python3 ./cassandra-restore.py --debug --backup BACKUP --create
# Where BACKUP is the full path to the backup directory, for example: /opt/veridiumid/backup/cassandra/2022-05-04_12-51

Restore the data from the backup


# Run this command on all persistence nodes as root (can be run in parallel):
python3 ./cassandra-restore.py --debug --backup BACKUP --restore
# Where BACKUP is the full path to the backup directory, for example: /opt/veridiumid/backup/cassandra/2022-05-04_12-51

Rebuild indexes


# Run this command on all persistence nodes as root (can be run in parallel):
python3 ./cassandra-restore.py --debug --backup BACKUP --index
# Where BACKUP is the full path to the backup directory, for example: /opt/veridiumid/backup/cassandra/2022-05-04_12-51

Start web applications on all webapp nodes


# Connect to all webapp nodes and run the following command as root:
bash /etc/veridiumid/scripts/veridium_services.sh start

Repair the Cassandra cluster


# Run the following command as root on all persistence nodes, one at a time:
bash /opt/veridiumid/cassandra/conf/cassandra_maintenance.sh -c /opt/veridiumid/cassandra/conf/maintenance.conf
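The create, restore and index steps all take the same BACKUP path, so it is worth checking that value before starting. The sketch below uses hypothetical helpers (not shipped with VeridiumID) to pick the newest directory named YYYY-MM-DD_HH-mm and to confirm that a given path exists and matches that naming scheme. It assumes, as in the commands above, that cassandra-restore.py is run from the current directory.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not shipped with VeridiumID) for choosing the BACKUP
# argument of cassandra-restore.py. Backups are directories named
# YYYY-MM-DD_HH-mm, so a lexical sort is also a chronological sort.

# Print the newest Cassandra backup directory under DIR.
latest_cassandra_backup() {
  local dir=${1:-/opt/veridiumid/backup/cassandra}
  find "$dir" -mindepth 1 -maxdepth 1 -type d \
    -name '[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]_[0-9][0-9]-[0-9][0-9]' \
    | LC_ALL=C sort | tail -n 1
}

# Return 0 if PATH is an existing directory named YYYY-MM-DD_HH-mm.
is_cassandra_backup() {
  local path=${1%/}
  [ -d "$path" ] || return 1
  case $(basename "$path") in
    [0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]_[0-9][0-9]-[0-9][0-9]) return 0 ;;
    *) return 1 ;;
  esac
}

# Example:
# BACKUP=$(latest_cassandra_backup)
# is_cassandra_backup "$BACKUP" && python3 ./cassandra-restore.py --debug --backup "$BACKUP" --restore
```

Checking the path first catches a mistyped BACKUP value before any restore step runs.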

3) ElasticSearch backup

This process downloads the existing ElasticSearch data and the settings of all indexes and saves them to disk.

| Item | Details |
|---|---|
| Script location | /opt/veridiumid/elasticsearch/bin/elasticsearch_backup.sh |
| Configuration file | /opt/veridiumid/elasticsearch/bin/elasticsearch_backup.conf |
| Cronjob default time | 15 0 * * * (every day at 00:15) |
| Log file | /var/log/veridiumid/elasticsearch/backup.log |
| Number of backups maintained | 10 (settings backups only) |
| Backup location | /opt/veridiumid/backup/elasticsearch/data (ElasticSearch data); /opt/veridiumid/backup/elasticsearch/settings (ElasticSearch index settings) |
| Backup name | YYYY-MM-DD_HH-mm (ElasticSearch index settings); INDEX_NAME-YYYY-MM_DDHHmmss.esdb.tar.gz (ElasticSearch data) |
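Both naming schemes in the table use zero-padded timestamps, so the newest backup can be found with a plain text sort. The sketch below uses hypothetical helpers (not shipped with VeridiumID) to print the newest settings directory and the newest data archive for one index, either of which can be passed as the --dir value of elk_ops.sh --restore.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not shipped with VeridiumID) for choosing the --dir
# value passed to elk_ops.sh --restore. Both naming schemes use zero-padded
# timestamps, so a plain lexical sort is also a chronological sort.

# Print the newest settings backup directory (YYYY-MM-DD_HH-mm).
latest_es_settings_backup() {
  local dir=${1:-/opt/veridiumid/backup/elasticsearch/settings}
  find "$dir" -mindepth 1 -maxdepth 1 -type d | LC_ALL=C sort | tail -n 1
}

# Print the newest data archive for one index
# (INDEX_NAME-YYYY-MM_DDHHmmss.esdb.tar.gz). Requiring a digit right after
# the dash stops an index name from matching a longer name that shares
# its prefix, for example "idx" versus "idx-old".
latest_es_index_archive() {
  local index=$1 dir=${2:-/opt/veridiumid/backup/elasticsearch/data}
  find "$dir" -maxdepth 1 -type f -name "${index}-[0-9]*.esdb.tar.gz" \
    | LC_ALL=C sort | tail -n 1
}

# Example:
# bash /opt/veridiumid/migration/bin/elk_ops.sh --restore --dir="$(latest_es_settings_backup)"
```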

Configuration file:

The configuration file contains the following:

# Debug enabled or not (1 or 0)

DEBUG=0

# Path to directory used for Data backups

BKP_DATA_DIR=/opt/veridiumid/backup/elasticsearch/data

# Path to directory used for Settings backups

BKP_SETTINGS_DIR=/opt/veridiumid/backup/elasticsearch/settings

# Limit of settings backups

LIMIT=10

# Export page size

EXPORT_PAGE_SIZE=5000

# Request timeout in minutes

REQUEST_TIMEOUT=5

# Connection timeout in minutes

CONNECTION_TIMEOUT=3

# Number of parallel tasks

PARALLEL_TASKS=2

Changing the number of maintained settings backups:

To change the number of maintained settings backups, change the value of LIMIT in the configuration file.
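After changing LIMIT, it can be useful to compare it with the number of settings backups currently on disk. The sketch below is a hypothetical check (not shipped with VeridiumID) that reads LIMIT and BKP_SETTINGS_DIR from the configuration file, assuming both are plain KEY=value lines as shown above. It only reports; it does not remove anything.

```shell
#!/usr/bin/env bash
# Hypothetical check (not shipped with VeridiumID): compare the number of
# settings backups on disk with LIMIT from elasticsearch_backup.conf.
# Assumes LIMIT and BKP_SETTINGS_DIR are plain KEY=value lines.

# Last assignment of KEY in FILE, with surrounding double quotes removed.
conf_value() {
  grep "^${2}=" "$1" | tail -n 1 | cut -d= -f2- | tr -d '"'
}

# Print the current count and LIMIT; return 1 if the count exceeds LIMIT.
check_settings_retention() {
  local conf=${1:-/opt/veridiumid/elasticsearch/bin/elasticsearch_backup.conf}
  local limit dir count
  limit=$(conf_value "$conf" LIMIT)
  dir=$(conf_value "$conf" BKP_SETTINGS_DIR)
  count=$(find "$dir" -mindepth 1 -maxdepth 1 -type d | wc -l | tr -d ' ')
  echo "settings backups: $count (LIMIT=$limit)"
  [ "$count" -le "$limit" ]
}
```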

Performing a backup:

Run the following command as root user to perform an ElasticSearch backup:

bash /opt/veridiumid/elasticsearch/bin/elasticsearch_backup.sh /opt/veridiumid/elasticsearch/bin/elasticsearch_backup.conf

Recover from backup:

Run the following command as root to recover from an ElasticSearch backup:

bash /opt/veridiumid/migration/bin/elk_ops.sh --restore --dir=BACKUP_PATH

# Where BACKUP_PATH is the full path to a backup directory or, for a single index, to its .esdb.tar.gz archive

# Settings restore

bash /opt/veridiumid/migration/bin/elk_ops.sh --restore --dir=/opt/veridiumid/backup/elasticsearch/settings/YYYY-MM-DD_HH-mm

# All Data restore

bash /opt/veridiumid/migration/bin/elk_ops.sh --restore --dir=/opt/veridiumid/backup/elasticsearch/data

# Restore specific index data

bash /opt/veridiumid/migration/bin/elk_ops.sh --restore --dir=/opt/veridiumid/backup/elasticsearch/data/INDEX_NAME-YYYY-MM_DDHHmmss.esdb.tar.gz

3.1) Full ElasticSearch restoration

Leave all services running when you start this procedure.

1. On all persistence nodes run:

systemctl stop ver_elasticsearch

rm -rf /opt/veridiumid/elasticsearch/data/*

2. On all persistence nodes, edit the following file:

vim /opt/veridiumid/elasticsearch/config/elasticsearch.yml

Uncomment the cluster.initial_master_nodes: line.

3. On all persistence nodes, run:

systemctl start ver_elasticsearch

4. Go to websecadmin -> advanced -> elasticsearch.json and set "apiKey": ""

Do the same for websecadmin -> advanced -> kibana.json

5. On one webapp node, retrieve the Kibana password:

grep elasticsearch.password /etc/veridiumid/kibana/kibana.yml

6. On a persistence node, run the command below, replacing KIBANA_PASSWORD with the password retrieved in step 5:

eops -x=POST -p="/_security/user/kibana_system/_password" -d='{"password":"KIBANA_PASSWORD"}'

7. On one node, run:

bash /opt/veridiumid/migration/bin/elk_ops.sh --update-settings

8. Identify the persistence node on which the ElasticSearch backup runs (on that node, the directory below contains backup files). On that persistence node, run the restore command:

ls -lrt /opt/veridiumid/backup/elasticsearch/data/

bash /opt/veridiumid/migration/bin/elk_ops.sh --restore --dir=/opt/veridiumid/backup/elasticsearch/data/

9. Run check_services and eops -l to verify that the indices were created and that all of them are green.

4) Configuration backup

This process creates an archive of all important configuration files of the VeridiumID services.

| Item | Details |
|---|---|
| Script location | /etc/veridiumid/scripts/backup_configs.sh |
| Configuration file | /etc/veridiumid/scripts/backup_configs.conf |
| Cronjob default time | 0 2 * * 6 (Saturdays at 02:00) |
| Log file | /var/log/veridiumid/ops/backup_configs.log |
| Number of backups maintained | Last 31 days' worth of backups |
| Backup location | /opt/veridiumid/backup/all_configs |
| Backup name | IP_ADDR_YYYYMMDDHHmmss.tar.gz |
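Because archive names start with an IP address (presumably that of the node that created them) followed by a fixed-width timestamp, the newest archive for one node can be found by filtering on the address and sorting. The sketch below is a hypothetical helper, not shipped with VeridiumID. Matching on the underscore after the address keeps 10.0.0.5 from also matching 10.0.0.50.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not shipped with VeridiumID): print the newest
# configuration archive for one IP address. Archives are named
# IP_ADDR_YYYYMMDDHHmmss.tar.gz; the "_" after the address keeps 10.0.0.5
# from matching 10.0.0.50, and the fixed-width timestamp sorts chronologically.
latest_config_backup() {
  local ip=$1 dir=${2:-/opt/veridiumid/backup/all_configs}
  find "$dir" -maxdepth 1 -type f -name "${ip}_[0-9]*.tar.gz" | LC_ALL=C sort | tail -n 1
}

# Example, ASSUMING the archive is named after the first address reported by
# `hostname -I` (check the actual names in all_configs first):
# latest_config_backup "$(hostname -I | awk '{print $1}')"
```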

Configuration file:

The configuration file lists the files and directories to back up, one entry per line, in the following format: service_name:full_path:user:group

cassandra:/opt/veridiumid/cassandra/conf/cassandra-env.sh:ver_cassandra:veridiumid

cassandra:/opt/veridiumid/cassandra/conf/cassandra_maintenance.sh:ver_cassandra:veridiumid

cassandra:/opt/veridiumid/cassandra/conf/cassandra-rackdc.properties:ver_cassandra:veridiumid

cassandra:/opt/veridiumid/cassandra/conf/cassandra.yaml:ver_cassandra:veridiumid

cassandra:/opt/veridiumid/cassandra/conf/cqlsh_cassandra_cert.pem:ver_cassandra:veridiumid

cassandra:/opt/veridiumid/cassandra/conf/cqlsh_cassandra_key.pem:ver_cassandra:veridiumid

cassandra:/opt/veridiumid/cassandra/conf/jvm.options:ver_cassandra:veridiumid

cassandra:/opt/veridiumid/cassandra/conf/jvm-server.options:ver_cassandra:veridiumid

cassandra:/opt/veridiumid/cassandra/conf/KeyStore.jks:ver_cassandra:veridiumid

cassandra:/opt/veridiumid/cassandra/conf/maintenance.conf:ver_cassandra:veridiumid

cassandra:/opt/veridiumid/cassandra/conf/TrustStore.jks:ver_cassandra:veridiumid

cassandra:/opt/veridiumid/cassandra/conf/veridiumid_cqlshrc:ver_cassandra:veridiumid

elasticsearch:/opt/veridiumid/elasticsearch/config:veridiumid:veridiumid

fido:/opt/veridiumid/fido/conf/log4j-fido.xml:ver_fido:veridiumid

fido:/etc/default/veridiumid/ver_fido:ver_fido:veridiumid

haproxy:/opt/veridiumid/haproxy/conf/haproxy.cfg:ver_haproxy:veridiumid

haproxy:/opt/veridiumid/haproxy/conf/server.pem:ver_haproxy:veridiumid

haproxy:/opt/veridiumid/haproxy/conf/client-ca.pem:ver_haproxy:veridiumid

notifications:/etc/default/veridiumid/ver_notifications:ver_notifications:veridiumid

notifications:/opt/veridiumid/notifications/conf/log4j-notifications.xml:ver_notifications:veridiumid

selfservice:/etc/default/veridiumid/ver_selfservice:ver_selfservice:veridiumid

selfservice:/opt/veridiumid/selfservice/conf/log4j.xml:ver_selfservice:veridiumid

statistics:/etc/default/veridiumid/ver_statistics:ver_statistics:veridiumid

statistics:/opt/veridiumid/statistics/conf/log4j-auth-sessions-global.xml:ver_statistics:veridiumid

statistics:/opt/veridiumid/statistics/conf/log4j-auth-sessions-local.xml:ver_statistics:veridiumid

statistics:/opt/veridiumid/statistics/conf/log4j-dashboard-account-bioengines.xml:ver_statistics:veridiumid

statistics:/opt/veridiumid/statistics/conf/log4j-dashboard-sessions-global.xml:ver_statistics:veridiumid

statistics:/opt/veridiumid/statistics/conf/log4j-dashboard-account-sessions.xml:ver_statistics:veridiumid

statistics:/opt/veridiumid/statistics/conf/log4j-dashboard-sessions-local.xml:ver_statistics:veridiumid

tomcat:/opt/veridiumid/tomcat/bin/setenv.sh:ver_tomcat:veridiumid

tomcat:/opt/veridiumid/tomcat/certs:ver_tomcat:veridiumid

tomcat:/opt/veridiumid/tomcat/conf:ver_tomcat:veridiumid

zookeeper:/opt/veridiumid/zookeeper/conf/java.env:ver_zookeeper:veridiumid

zookeeper:/opt/veridiumid/zookeeper/conf/zoo.cfg:ver_zookeeper:veridiumid

zookeeper:/opt/veridiumid/zookeeper/data/myid:ver_zookeeper:veridiumid

data-retention:/etc/default/veridiumid/ver_data_retention:ver_data_retention:veridiumid

data-retention:/opt/veridiumid/data-retention/conf/log4j-data-retention.xml:ver_data_retention:veridiumid

freeradius:/opt/veridiumid/freeradius/etc/raddb/certs:ver_freeradius:veridiumid

freeradius:/opt/veridiumid/freeradius/etc/raddb/clients.conf:ver_freeradius:veridiumid

freeradius:/opt/veridiumid/freeradius/etc/raddb/sites-available/tls:ver_freeradius:veridiumid

kafka:/opt/veridiumid/kafka/config/certs:ver_kafka:veridiumid

kafka:/opt/veridiumid/kafka/config/server.properties:ver_kafka:veridiumid

opa:/opt/veridiumid/opa/certs:veridiumid:veridiumid

opa:/opt/veridiumid/opa/conf/opa.yaml:veridiumid:veridiumid

shibboleth-idp:/opt/veridiumid/shibboleth-idp/credentials:ver_tomcat:veridiumid

shibboleth-idp:/opt/veridiumid/shibboleth-idp/metadata:ver_tomcat:veridiumid

shibboleth-idp:/opt/veridiumid/shibboleth-idp/conf:ver_tomcat:veridiumid

shibboleth-idp:/opt/veridiumid/shibboleth-idp/edit-webapp:ver_tomcat:veridiumid

websecadmin:/etc/default/veridiumid/ver_websecadmin:ver_websecadmin:veridiumid

websecadmin:/opt/veridiumid/websecadmin/certs:ver_websecadmin:veridiumid

websecadmin:/opt/veridiumid/websecadmin/conf/log4j.xml:ver_websecadmin:veridiumid

migration:/opt/veridiumid/migration/conf/log4j.xml:veridiumid:veridiumid

system:/etc/hosts:root:root

system:/etc/sysctl.conf:root:root

system:/etc/security/limits.conf:root:root

ca:/opt/veridiumid/CA:veridiumid:veridiumid

Changing the number of maintained backups:

The value is hardcoded in the /etc/veridiumid/scripts/backup_configs.sh script. To modify it, edit the following line (line 127):

find ${VERIDIUM_DIR}/backup/all_configs -mtime +31 -exec rm -rf {} \;

Replace 31 with the number of days for which you want to keep backups.
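Before lowering the retention, it can help to see which archives would be affected. The sketch below is a hypothetical dry run, not part of backup_configs.sh: it applies the same -mtime +DAYS test as line 127 but prints the matches instead of removing them. The -mindepth 1 option is an addition of this sketch, so that the all_configs directory itself is never listed.

```shell
#!/usr/bin/env bash
# Hypothetical dry run (not part of backup_configs.sh): list what a
# retention of DAYS would delete, using the same -mtime +DAYS test as
# line 127, but printing instead of removing. -mindepth 1 keeps the
# all_configs directory itself out of the listing.
preview_config_cleanup() {
  local days=$1 dir=${2:-/opt/veridiumid/backup/all_configs}
  find "$dir" -mindepth 1 -mtime +"$days" -print
}

# Example: what would a 14-day retention remove?
# preview_config_cleanup 14
```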

Performing a backup:

Run the following command as root user to perform a Configuration backup:

bash /etc/veridiumid/scripts/backup_configs.sh /etc/veridiumid/scripts/backup_configs.conf

Recover from backup:

Perform the following steps as root to recover from a Configuration backup:

Stop all VeridiumID services


# Connect to all nodes and run the following command as root, starting with the webapp nodes and then the persistence nodes:
bash /etc/veridiumid/scripts/veridium_services.sh stop

Revert configuration files


# Connect to all nodes and run the following command as root (replace IP_ADDR_YYYYMMDDHHmmss with the name of the archive to restore):
bash /etc/veridiumid/scripts/config_revert.sh -c /etc/veridiumid/scripts/backup_configs.conf -b /opt/veridiumid/backup/all_configs/IP_ADDR_YYYYMMDDHHmmss.tar.gz

Start all VeridiumID services


# Connect to all nodes and run the following command as root, starting with the persistence nodes and then the webapp nodes:
bash /etc/veridiumid/scripts/veridium_services.sh start
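After reverting the configuration files, a quick check that every entry listed in backup_configs.conf is back in place can catch a missed node. The sketch below is hypothetical (not shipped with VeridiumID); it assumes GNU stat, that no field contains a colon, and that the user and group columns describe the expected owner of each restored path.

```shell
#!/usr/bin/env bash
# Hypothetical post-restore check (not shipped with VeridiumID): read
# backup_configs.conf (service_name:full_path:user:group, one entry per
# line) and report entries whose path is missing or whose owner differs.
# Assumes GNU stat and that no field contains ":".
verify_config_entries() {
  local conf=${1:-/etc/veridiumid/scripts/backup_configs.conf}
  local service path user group owner problems=0
  while IFS=: read -r service path user group; do
    [ -z "$service" ] && continue            # skip blank lines
    case $service in \#*) continue ;; esac   # skip comment lines
    if [ ! -e "$path" ]; then
      echo "MISSING  $service $path"
      problems=$((problems + 1))
      continue
    fi
    owner=$(stat -c '%U:%G' "$path")
    if [ "$owner" != "$user:$group" ]; then
      echo "OWNER    $service $path is $owner, expected $user:$group"
      problems=$((problems + 1))
    fi
  done < "$conf"
  [ "$problems" -eq 0 ]
}

# Example: verify_config_entries /etc/veridiumid/scripts/backup_configs.conf
```

Run it on each node; it returns 1 if any entry is missing or has an unexpected owner.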