I. Architecture

System Integration:


Overview:

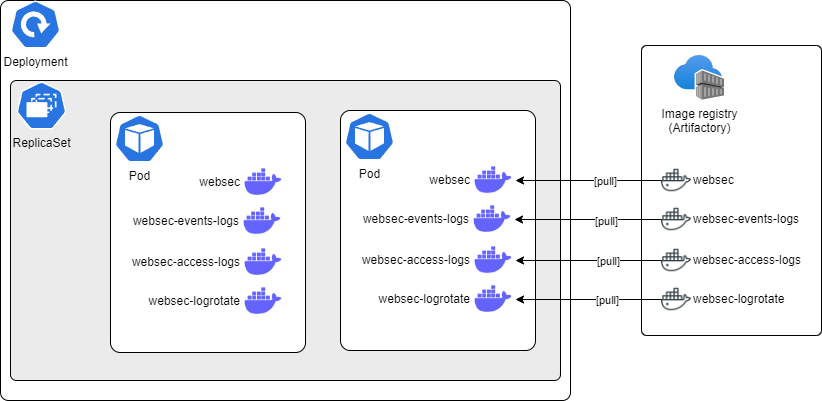

Example of a pod and its deployment (websec):

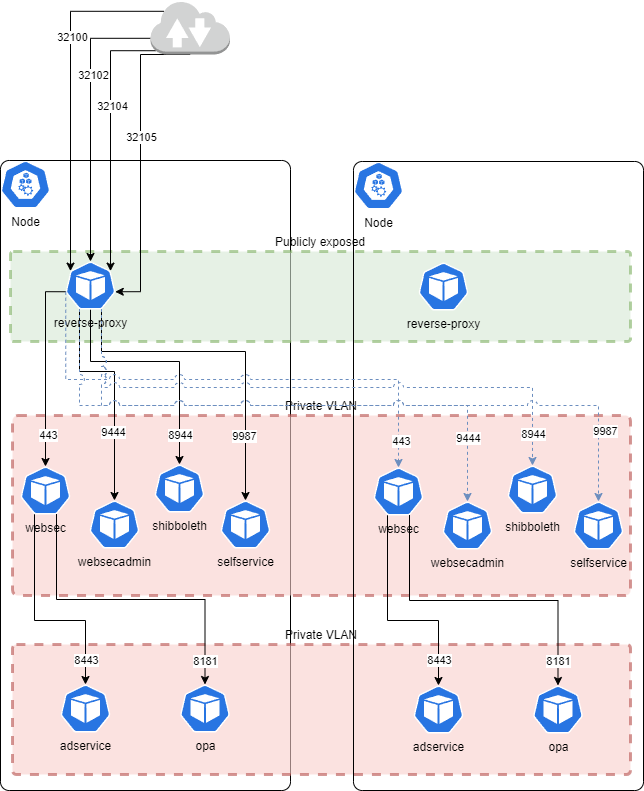

Networking overview:

Definitions:

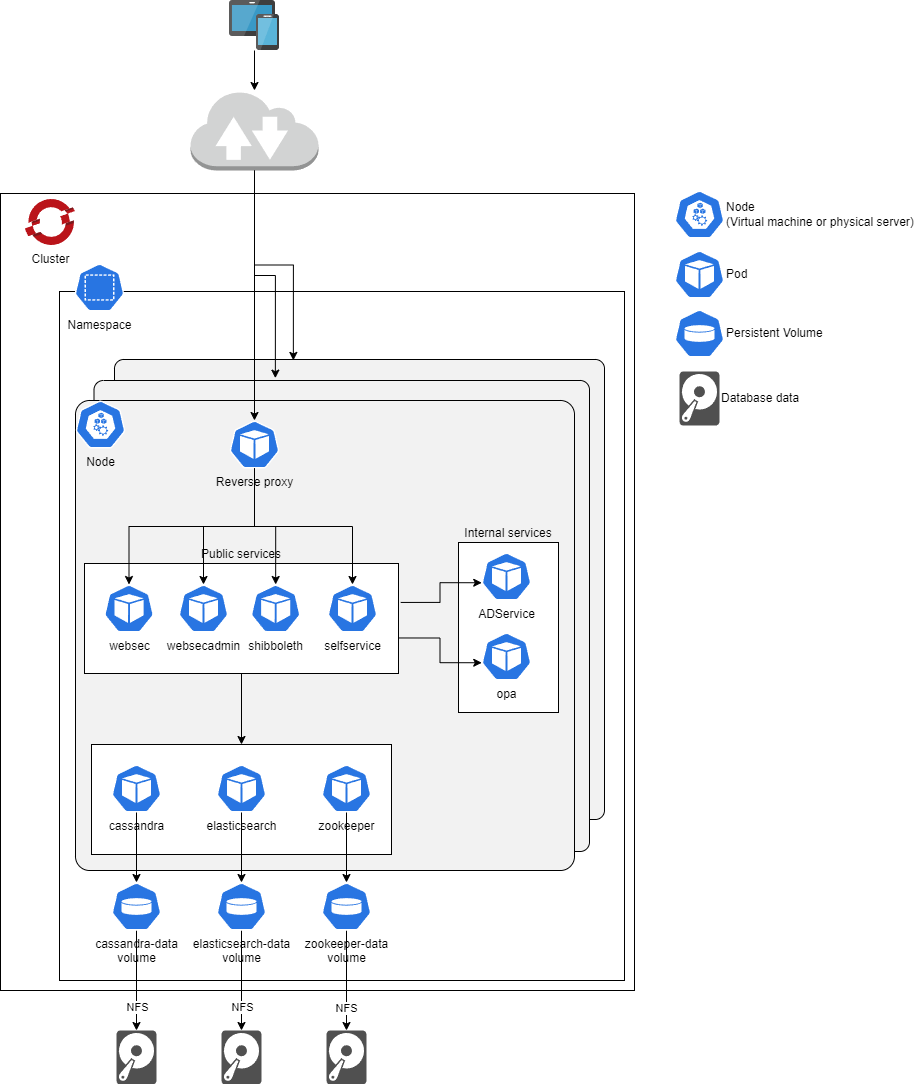

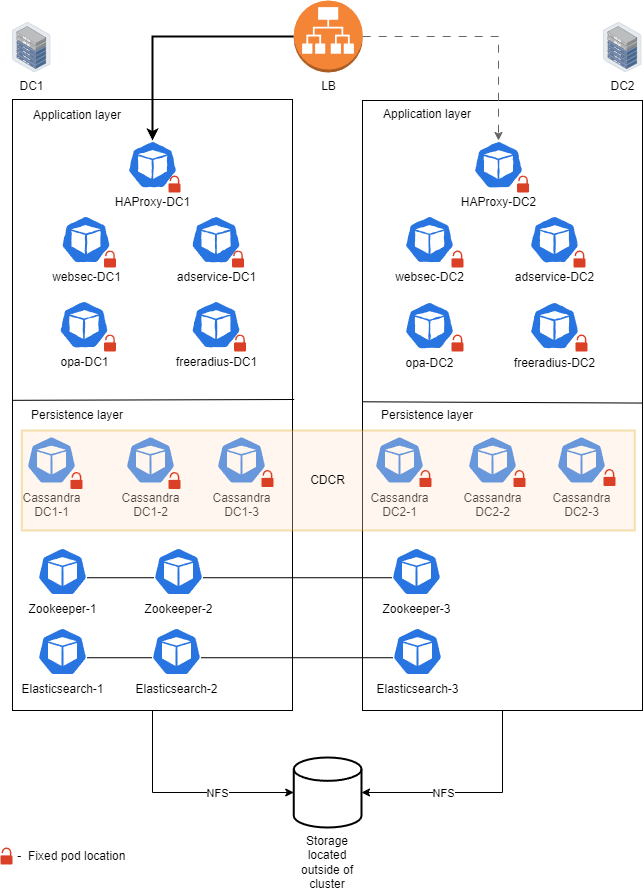

Our application is deployed on Kubernetes/OpenShift and is made up of several subsystems, including websec, websecadmin, shibboleth, cassandra, and zookeeper. To run them in a scalable way, we rely on standard Kubernetes/OpenShift entities: Pods, Deployments, StatefulSets, Services, and Ingress resources. The sections below describe how each of these entities maps onto the deployed application:

Pods:

In Kubernetes/OpenShift, a Pod is the smallest deployable unit. In other words, if you need to run a single container in Kubernetes/OpenShift, then you need to create a Pod for that container. At the same time, a Pod can contain more than one container, if these containers are relatively tightly coupled. In our application, each subsystem, such as websec, websecadmin, shibboleth, cassandra, and zookeeper, runs as one or more Pods.

Pods are ephemeral and can be created, scaled, and replaced easily. They encapsulate the application's code, dependencies, and storage resources.
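As a minimal sketch of what such a Pod looks like, the Python snippet below builds a single-container Pod manifest as a plain dictionary and prints it as JSON, a format that `kubectl apply -f` accepts alongside YAML. The image name, port, and `app` label are hypothetical placeholders, not values taken from our deployment.

```python
import json


def pod_manifest(name: str, image: str, port: int) -> dict:
    """Build a single-container Pod manifest (API version v1)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical image and port, for illustration only.
    manifest = pod_manifest("websec", "registry.example.com/websec:1.0", 8080)
    print(json.dumps(manifest, indent=2))
```

In practice we rarely create bare Pods like this; the controllers described in the next section create them for us from a Pod template.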

Deployment and StatefulSet:

We use both Deployments and StatefulSets to manage the Pods depending on the specific requirements of each subsystem.

Deployment: For subsystems where instances can be scaled horizontally, we use Deployments. A Deployment ensures that a specified number of Pod replicas is running at all times, which makes it suitable for stateless services such as web servers.
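The following sketch shows the shape of a Deployment for a stateless subsystem, assuming websec is one of them. It builds the manifest as a Python dictionary; the image name and replica count are hypothetical and only illustrate how the replica count and the Pod template fit together.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a Deployment manifest (API version apps/v1)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # Desired number of identical Pod replicas.
            "replicas": replicas,
            # The selector must match the labels in the Pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image and replica count, for illustration only.
    manifest = deployment_manifest("websec", "registry.example.com/websec:1.0", 4)
    print(json.dumps(manifest, indent=2))
```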

StatefulSet: For subsystems that require stable and unique network identities or persistent storage, we use StatefulSets. StatefulSets provide ordered, persistent deployment of Pods and are ideal for stateful applications like databases (e.g., Cassandra) or when you need predictable naming.
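To illustrate the two StatefulSet properties mentioned above, the sketch below builds a StatefulSet manifest with a per-Pod volume claim and lists the predictable Pod names that result (`<name>-<ordinal>`, starting at 0). The image tag, storage size, mount path, and headless Service name are assumptions chosen for the example, not our actual Cassandra settings.

```python
import json


def statefulset_manifest(name: str, image: str, replicas: int, storage: str) -> dict:
    """Build a StatefulSet manifest (API version apps/v1) with one volume per Pod."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            # Name of the governing (headless) Service; assumed to equal the set name.
            "serviceName": name,
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # Assumed data directory for the example.
                            "volumeMounts": [{"name": "data", "mountPath": "/var/lib/cassandra"}],
                        }
                    ]
                },
            },
            # Each Pod gets its own PersistentVolumeClaim created from this template.
            "volumeClaimTemplates": [
                {
                    "metadata": {"name": "data"},
                    "spec": {
                        "accessModes": ["ReadWriteOnce"],
                        "resources": {"requests": {"storage": storage}},
                    },
                }
            ],
        },
    }


def pod_names(name: str, replicas: int) -> list:
    """StatefulSet Pods are named <name>-<ordinal>, with ordinals starting at 0."""
    return [f"{name}-{i}" for i in range(replicas)]


if __name__ == "__main__":
    # Hypothetical image and storage size, for illustration only.
    print(json.dumps(statefulset_manifest("cassandra", "cassandra:4.1", 3, "10Gi"), indent=2))
    print(pod_names("cassandra", 3))  # ['cassandra-0', 'cassandra-1', 'cassandra-2']
```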

Namespace:

We've placed all of these Pods, Deployments, and StatefulSets in a single namespace, which keeps the application separated and isolated from other workloads in the cluster. A Kubernetes/OpenShift namespace is a logical boundary that scopes resources and helps with resource management and access control.

Services:

To make our subsystems accessible to other components within the Kubernetes/OpenShift cluster, we expose them as Services. A Service provides a stable, network-resolvable endpoint to access one or more Pods.

For instance, if our websec subsystem has multiple Pods, a Service associated with it ensures that requests are load-balanced among these Pods, abstracting the individual Pod IPs.

Services allow subsystems to communicate with each other within the cluster using the Service's DNS name.
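A minimal sketch of such a Service, and of the DNS name other subsystems would use to reach it, is shown below. The port numbers, the `app` label, and the namespace name `websec-ns` are hypothetical; the DNS helper also assumes the default cluster domain `cluster.local`, which a cluster administrator can change.

```python
import json


def service_manifest(name: str, port: int, target_port: int) -> dict:
    """Build a ClusterIP Service that load-balances across Pods labeled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # Requests are spread over all ready Pods matching this selector.
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def service_dns_name(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Fully qualified in-cluster DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    # Hypothetical ports and namespace, for illustration only.
    print(json.dumps(service_manifest("websec", 80, 8080), indent=2))
    print(service_dns_name("websec", "websec-ns"))  # websec.websec-ns.svc.cluster.local
```

Because all of our subsystems share one namespace, the short name (`websec` in this example) also resolves from any other Pod in that namespace.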

Ingress:

To make specific services available outside the Kubernetes/OpenShift cluster, we utilize an HAProxy ingress. An Ingress resource defines rules for routing external traffic to Services inside the cluster.

The HAProxy ingress provides load balancing and manages external access to our services. It can route traffic based on hostname or path, and it enables the exposure of required services to the external world, typically through a publicly accessible IP address.
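The host- and path-based routing described above can be sketched as an Ingress resource like the one built below. The hostname, path, Service port, and ingress class name `haproxy` are hypothetical placeholders; the class name in particular depends on how the HAProxy ingress controller is installed.

```python
import json


def ingress_manifest(name: str, host: str, path: str, service: str,
                     port: int, ingress_class: str) -> dict:
    """Build an Ingress (networking.k8s.io/v1) routing host + path to one Service."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # Selects which ingress controller handles this resource.
            "ingressClassName": ingress_class,
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                "path": path,
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {"name": service, "port": {"number": port}}
                                },
                            }
                        ]
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical host, path, and class name, for illustration only.
    manifest = ingress_manifest("websec", "websec.example.com", "/", "websec", 80, "haproxy")
    print(json.dumps(manifest, indent=2))
```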

In summary, our Kubernetes/OpenShift-deployed application effectively utilizes Pods, Deployments, StatefulSets, Namespaces, Services, and an HAProxy Ingress to manage, orchestrate, and expose various subsystems, ensuring scalability, resilience, and proper isolation within the Kubernetes/OpenShift cluster, while also facilitating external access to essential services.

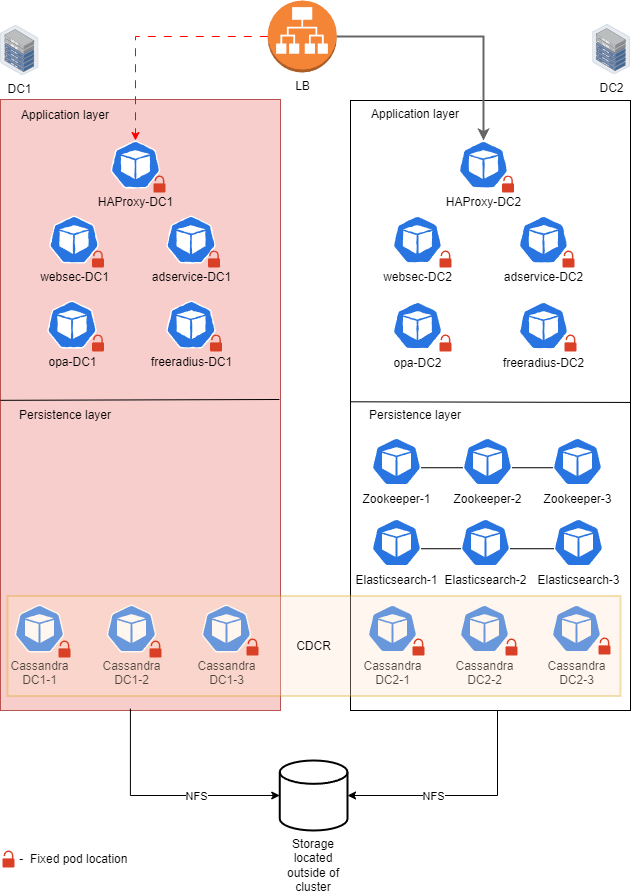

CDCR (Cross-Datacenter Replication) Implementation

a. Normal operation (when both datacenters are up)

Replica pods of the application are distributed evenly across both datacenters.

Zookeeper and Elasticsearch pods can run in any arbitrary location.

Cassandra runs in its native CDCR configuration utilizing dedicated pods in each datacenter.
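This document does not state which mechanism spreads the application replicas across the datacenters. One common way to get an even distribution is a topology spread constraint in the Pod template, sketched below. It assumes each datacenter is represented by a distinct value of the well-known node label `topology.kubernetes.io/zone`; the `app` label is likewise a placeholder.

```python
import json


def spread_constraint(app_label: str,
                      topology_key: str = "topology.kubernetes.io/zone") -> dict:
    """Build one topologySpreadConstraints entry for a Pod template spec."""
    return {
        # Allow at most one Pod of difference between any two topology domains.
        "maxSkew": 1,
        # Node label whose values define the domains (assumed: one value per datacenter).
        "topologyKey": topology_key,
        # Keep scheduling if the constraint cannot be met, e.g. when one datacenter is down.
        "whenUnsatisfiable": "ScheduleAnyway",
        "labelSelector": {"matchLabels": {"app": app_label}},
    }


if __name__ == "__main__":
    # This fragment would go under spec.template.spec of a Deployment.
    fragment = {"topologySpreadConstraints": [spread_constraint("websec")]}
    print(json.dumps(fragment, indent=2))
```

`ScheduleAnyway` is chosen here so that replacement pods can still be scheduled in the surviving datacenter during an outage; `DoNotSchedule` would enforce the spread strictly.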

b. Datacenter goes offline (for example, DC1)

Traffic is routed only to application pods in DC2 that are already running, with no downtime.

Zookeeper and Elasticsearch pods from DC1 are recreated in DC2 so that quorum is restored.
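The reason these pods must be recreated comes down to majority-quorum arithmetic: an ensemble of n members needs more than half of them alive to make progress. The sketch below works through one case. The ensemble size of 3 and the 2/1 placement across datacenters are assumptions for the example, not our actual layout.

```python
def quorum_size(ensemble_size: int) -> int:
    """Smallest number of members that forms a strict majority."""
    return ensemble_size // 2 + 1


def has_quorum(alive: int, ensemble_size: int) -> bool:
    """True if the surviving members can still form a majority."""
    return alive >= quorum_size(ensemble_size)


if __name__ == "__main__":
    # Assumed placement of a 3-member ensemble across the two datacenters.
    placement = {"DC1": 2, "DC2": 1}
    total = sum(placement.values())

    # DC1 goes offline: only the DC2 member survives.
    survivors = placement["DC2"]
    print(quorum_size(total))            # 2
    print(has_quorum(survivors, total))  # False

    # The two lost members are recreated in DC2.
    survivors += placement["DC1"]
    print(has_quorum(survivors, total))  # True
```

If the surviving datacenter happened to hold the majority, quorum would never be lost, but with pods placed arbitrarily that cannot be assumed.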